About Enlights

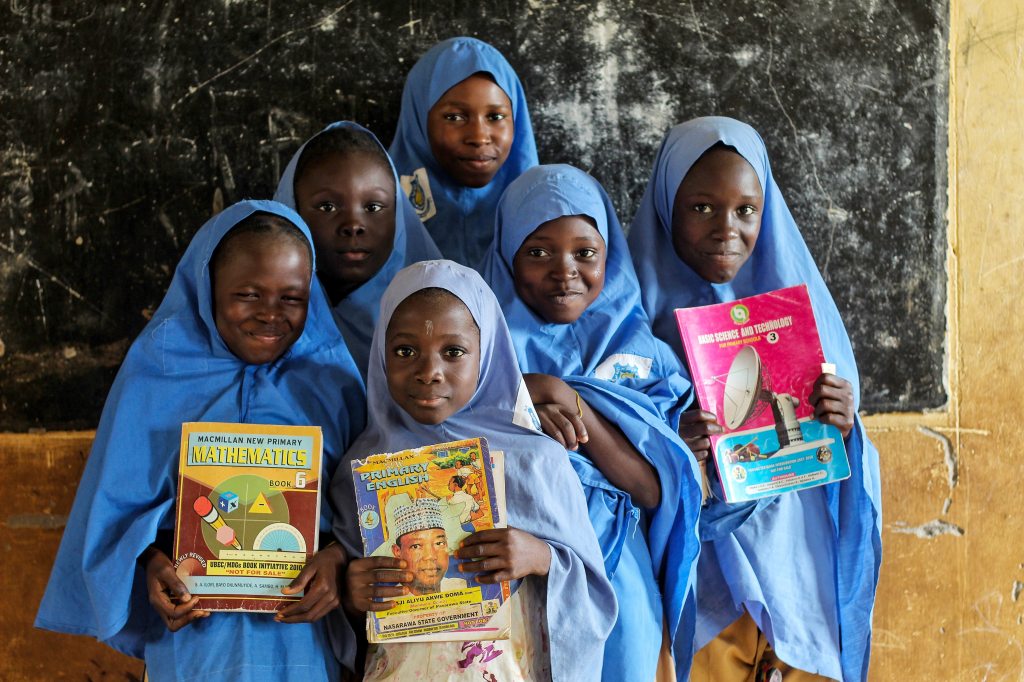

Enlights is a nonprofit that distributes educational supplies to youth in underserved schools worldwide, empowering them to reach their full potential in education and future careers.

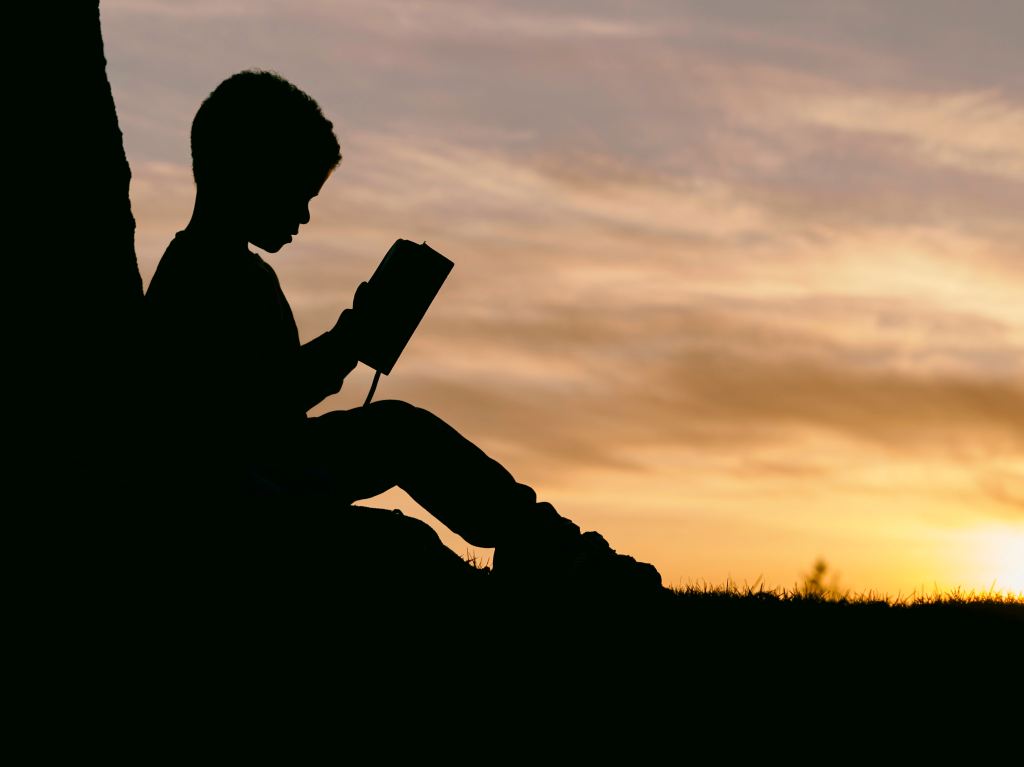

“Enlight” means to enlighten, to light or shed light upon, illuminate, furnish knowledge to and instruct.

“Education is not the filling of a bucket, but the lighting of a fire.”

W. B. Yeats

Founders

Matthew Persky has always believed that education is an important pillar in building sustainable communities. Since he was little, he has visited under-resourced areas around the world and become interested in improving access to resources. During a visit to an orphanage in Vietnam the summer after 2nd grade, he noticed that the children did not have many educational materials. Since then, he has personally distributed educational resources to Latin American schools for children with special needs and for youth who have escaped violence, abuse, and exploitation. He has also distributed resources to African, Central Asian, and other communities worldwide through the charity organizations he partners with.

Matthew believes that quality education is fundamental to financial security, family stability, and ultimately, cohesive and prosperous communities. At age 11, he built a full-scale wooden shelter for the unhoused and filmed an educational video to spread awareness about poverty and education. Later, he founded a nonprofit, The Equality Collective, to empower people by addressing inequalities in housing, health, and education, which often lead to inequalities in employment, income, and access to opportunities.

Enlights is one of The Equality Collective's initiatives. It provides resources to underserved communities worldwide while promoting equality and climate action. The Equality Collective has recently distributed resources to Eastern European refugees and victims of natural disasters in the Middle East. Matthew believes that everybody, regardless of their differences, should have an equal opportunity to learn, succeed, and achieve their dreams.

As the child of an immigrant, Daniel Persky visited Latin America and Southeast Asia, where he empathized with underserved youth who had few resources to survive, let alone thrive. Daniel founded The Equality Collective, which has various initiatives that champion equality and sustainability. The initiatives work together to promote diversity, equality, and inclusion in education, exercise, health, and fitness, so that individuals in under-resourced communities can achieve optimal performance and enjoy greater access to career opportunities and a higher quality of life.

Join us

We believe in promoting equal opportunities and improving access to quality education.

If you have educational resources to donate to underserved youth, please contact us.

Enlights is a subsidiary of The Equality Collective.

Info@EqualityGlobal.org

(650) 308-8855

“Nothing can dim the light that shines from within.”

Maya Angelou

Thank you for joining us in lighting the way.